Tree of Trust — On-Device AI Therapist

Built a privacy-first mental health companion using Gemma 3N, running entirely on-device with zero cloud connectivity.

Key Results

The Problem

People want to use AI for deeply personal conversations—mental health support, journaling, working through difficult emotions—but cloud-based AI creates an impossible tradeoff: share your most vulnerable thoughts with distant servers, or don't use AI at all.

The trust gap

- Many major tech companies have suffered data breaches, together exposing billions of records

- Cloud AI services typically log prompts, and those logs may be used as training data

- Privacy policies change — today's promise is tomorrow's liability

- Mental health data is especially sensitive — stigma, insurance implications, legal exposure

Users are wary, and they have good reason to be.

The Intervention

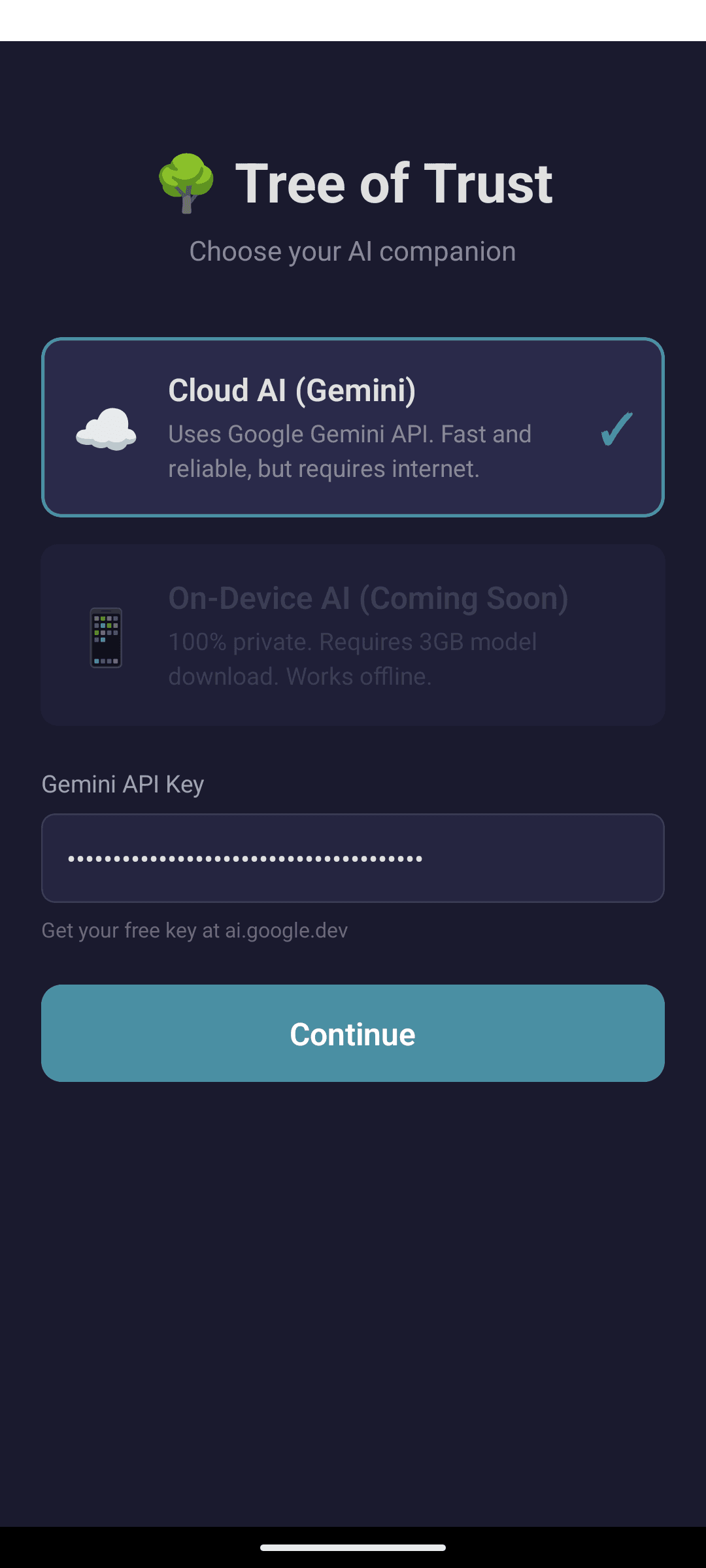

For the Kaggle Gemma 3N Impact Challenge, we built Tree of Trust — a mobile app that runs a full LLM entirely on the user's phone.

Core architecture

┌─────────────────────────────────────────────┐
│                User's Phone                 │
├─────────────────────────────────────────────┤
│  React Native App                           │
│  ├── Chat UI (messages, input, voice)       │
│  ├── Zustand Store (local persistence)      │
│  └── GemmaBridge (native module)            │
│         │                                   │
│         ▼                                   │
│  MediaPipe LLM Runtime                      │
│  └── Gemma 3N Model (2.9GB, int4)           │
├─────────────────────────────────────────────┤
│  ❌ NO network calls                        │
│  ❌ NO cloud APIs                           │
│  ❌ NO telemetry                            │
└─────────────────────────────────────────────┘

The privacy guarantee

Airplane mode works perfectly. After initial setup, the app never needs internet access. With no server in the loop, there is no remote copy of your conversations to breach. Your thoughts exist only on your device.

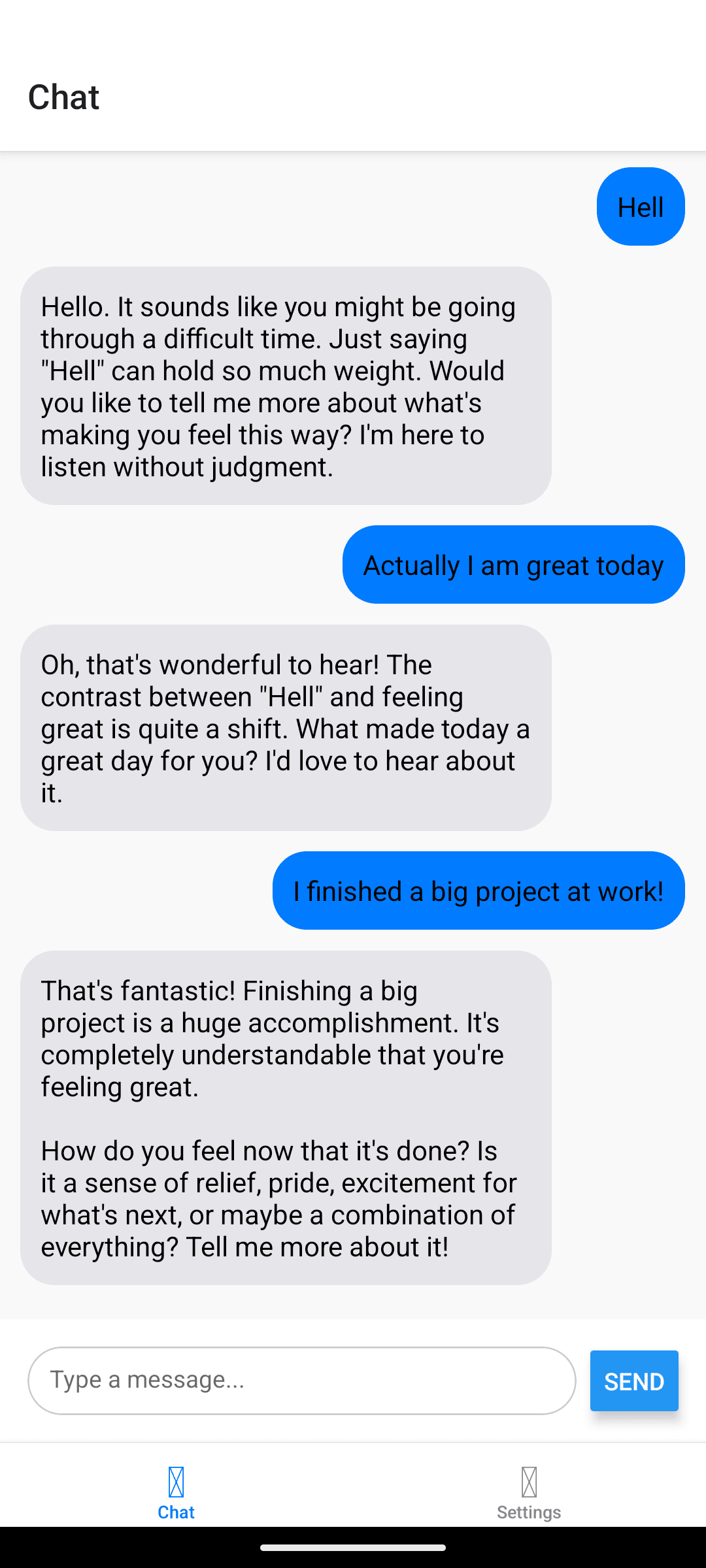

Therapeutic AI design

We crafted a system prompt for empathetic, supportive conversation:

- Active listening and validation

- Thoughtful follow-up questions

- Healthy coping strategies

- Clear boundaries (not a replacement for professional care)

- Gentle encouragement toward professional support when appropriate

Technical Challenges

The 2.9GB problem

Bundling a 2.9GB model into a mobile app isn't trivial:

- Local builds failed — Android Gradle ran out of memory processing the model file

- Asset bundling quirks — The .task file wasn't being included in the APK

- Compression conflicts — Android was trying to compress an already-compressed model

Solution: EAS Build (Expo's cloud build service) handles large assets properly on high-memory servers. Free tier works fine for personal projects.
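For the compression conflict specifically, a common Android-side remedy is to tell the packager to leave the model's file extension uncompressed. The write-up does not say this is what the project did, so treat the following as a sketch under that assumption: `addNoCompressForTaskFiles` is a hypothetical helper that patches the text of `android/app/build.gradle`, the kind of string edit an Expo config plugin could apply during prebuild.

```typescript
// Hypothetical helper (not from the project): inserts an aaptOptions block
// that marks .task files as "do not compress" inside the android { } section.
function addNoCompressForTaskFiles(buildGradle: string): string {
  // Leave the file alone if a noCompress rule is already present.
  if (buildGradle.includes("noCompress")) {
    return buildGradle;
  }
  const rule = '    aaptOptions {\n        noCompress "task"\n    }';
  // Match the top-level `android {` line and append the rule right after it.
  return buildGradle.replace(/^android\s*\{/m, (match) => `${match}\n${rule}`);
}

// Example input: a trimmed-down build.gradle.
const exampleGradle = "android {\n    compileSdkVersion 34\n}\n";
const patchedGradle = addNoCompressForTaskFiles(exampleGradle);
```

The helper is idempotent, so running it on an already patched file returns the file unchanged.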

Native bridge

React Native's JavaScript layer can't call MediaPipe's LLM inference directly, so we built a native bridge:

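// GemmaBridge/js/index.ts
import { NativeModules } from 'react-native';

// Assumption: the native module is registered under the name "GemmaBridge".
const Native = NativeModules.GemmaBridge;

// Load the model, optionally on the GPU backend.
export const loadModel = (useGPU: boolean): Promise<boolean> =>
  Native.loadModel(useGPU);

// Run inference on an assembled prompt and resolve with the generated text.
export const generateResponse = (prompt: string): Promise<string> =>
  Native.generateResponse(prompt);

// Report whether the model is already in memory.
export const isModelLoaded = (): Promise<boolean> =>
  Native.isModelLoaded();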
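A minimal usage sketch for the bridge above, assuming that GPU loading can fail on some devices and that falling back to CPU is acceptable. The `GemmaBridgeApi` interface and the `ensureModelLoaded` and `ask` helpers are hypothetical names introduced here; only the three function signatures come from the bridge itself.

```typescript
// Hypothetical interface mirroring the three bridge functions.
interface GemmaBridgeApi {
  loadModel(useGPU: boolean): Promise<boolean>;
  generateResponse(prompt: string): Promise<string>;
  isModelLoaded(): Promise<boolean>;
}

// Load the model once, trying the GPU first and falling back to CPU.
// Assumption: loadModel resolves to false (or rejects) when a backend is unavailable.
async function ensureModelLoaded(bridge: GemmaBridgeApi): Promise<boolean> {
  if (await bridge.isModelLoaded()) {
    return true;
  }
  try {
    if (await bridge.loadModel(true)) {
      return true;
    }
  } catch {
    // GPU path failed; fall through to the CPU attempt.
  }
  return bridge.loadModel(false);
}

// Make sure the model is ready, then forward the prompt to the native side.
async function ask(bridge: GemmaBridgeApi, prompt: string): Promise<string> {
  if (!(await ensureModelLoaded(bridge))) {
    throw new Error("Model could not be loaded on GPU or CPU");
  }
  return bridge.generateResponse(prompt);
}
```

Passing the bridge in as a parameter keeps the helpers testable with a fake object, without a device or the 2.9GB model.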

Context management

On-device models have limited context windows. We implemented conversation memory that:

- Includes recent messages for context

- Prepends the therapeutic system prompt

- Cleans up response formatting
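The three steps above can be sketched as a pair of pure functions. This is an illustration under stated assumptions, not the app's actual code: the system prompt wording, the six-message window, the plain `User:`/`Assistant:` transcript format, and the names `buildPrompt` and `cleanResponse` are all hypothetical. The real app may rely on Gemma's own turn markers instead.

```typescript
type Role = "user" | "assistant";

interface ChatMessage {
  role: Role;
  text: string;
}

// Illustrative wording only; not the prompt shipped in the app.
const SYSTEM_PROMPT =
  "You are a warm, supportive listener. Validate feelings, ask thoughtful " +
  "follow-up questions, suggest healthy coping strategies, and encourage " +
  "professional support when appropriate. You are not a replacement for " +
  "professional care.";

// Assumed window size; tune it to the model's context limit.
const MAX_RECENT_MESSAGES = 6;

// Prepend the system prompt, keep only the most recent turns, add the new input.
function buildPrompt(
  history: ChatMessage[],
  userInput: string,
  maxRecent: number = MAX_RECENT_MESSAGES
): string {
  const recent = maxRecent > 0 ? history.slice(-maxRecent) : [];
  const lines = recent.map(
    (m) => `${m.role === "user" ? "User" : "Assistant"}: ${m.text}`
  );
  return [SYSTEM_PROMPT, ...lines, `User: ${userInput}`, "Assistant:"].join("\n");
}

// Drop a leading "Assistant:" label and anything after an invented next user turn.
function cleanResponse(raw: string): string {
  const [firstTurn = ""] = raw.split(/\n\s*User:/);
  return firstTurn.replace(/^\s*Assistant:\s*/, "").trim();
}
```

Capping the history by message count is the simplest policy; a token-based budget would track the context window more closely but needs a tokenizer on the JavaScript side.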

The Outcome

What we shipped

- Complete chat interface — Message bubbles, input, loading states

- Model loading UX — Progress indicators, error handling

- Persistent history — Conversations saved locally via AsyncStorage

- Therapeutic prompting — Warm, supportive AI personality

- Privacy by design — Zero network calls after setup
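Local persistence of the conversation history might look like the sketch below. It is written against a small `KeyValueStorage` interface that mirrors the promise-based `getItem`/`setItem` shape of AsyncStorage, so it runs without React Native; the storage key, the `StoredMessage` shape, and the function names are assumptions for illustration. The app itself uses a Zustand store, which may handle this through its own persistence layer.

```typescript
// Minimal shape shared with AsyncStorage's getItem/setItem.
interface KeyValueStorage {
  getItem(key: string): Promise<string | null>;
  setItem(key: string, value: string): Promise<void>;
}

// Hypothetical message shape and storage key.
interface StoredMessage {
  role: "user" | "assistant";
  text: string;
  timestamp: number;
}

const HISTORY_KEY = "tree-of-trust/history";

// Serialize the whole conversation under a single key.
async function saveHistory(
  storage: KeyValueStorage,
  messages: StoredMessage[]
): Promise<void> {
  await storage.setItem(HISTORY_KEY, JSON.stringify(messages));
}

// Read the conversation back, returning an empty list if nothing usable is stored.
async function loadHistory(storage: KeyValueStorage): Promise<StoredMessage[]> {
  const raw = await storage.getItem(HISTORY_KEY);
  if (raw === null) {
    return [];
  }
  try {
    const parsed: unknown = JSON.parse(raw);
    return Array.isArray(parsed) ? (parsed as StoredMessage[]) : [];
  } catch {
    // Corrupted data should not crash the chat screen.
    return [];
  }
}
```

Nothing here touches the network: the data goes from memory to on-device storage and back.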

Demo

Open source

The complete source code is available: github.com/Moes-AI/gemma-3n-impact-challenge

What This Proves

Privacy and AI aren't mutually exclusive. With the right architecture:

- Models can run entirely on-device

- No data needs to leave the user's phone

- The UX can still be smooth and responsive

- Building this is accessible to solo developers

The future of personal AI isn't in the cloud — it's in your pocket.

Future Possibilities

- Voice input — On-device speech recognition (Whisper)

- iOS support — MediaPipe works on iOS too

- Mood tracking — Local analytics without cloud sync

- Export/backup — Encrypted, user-controlled

- Custom personas — Different therapeutic styles