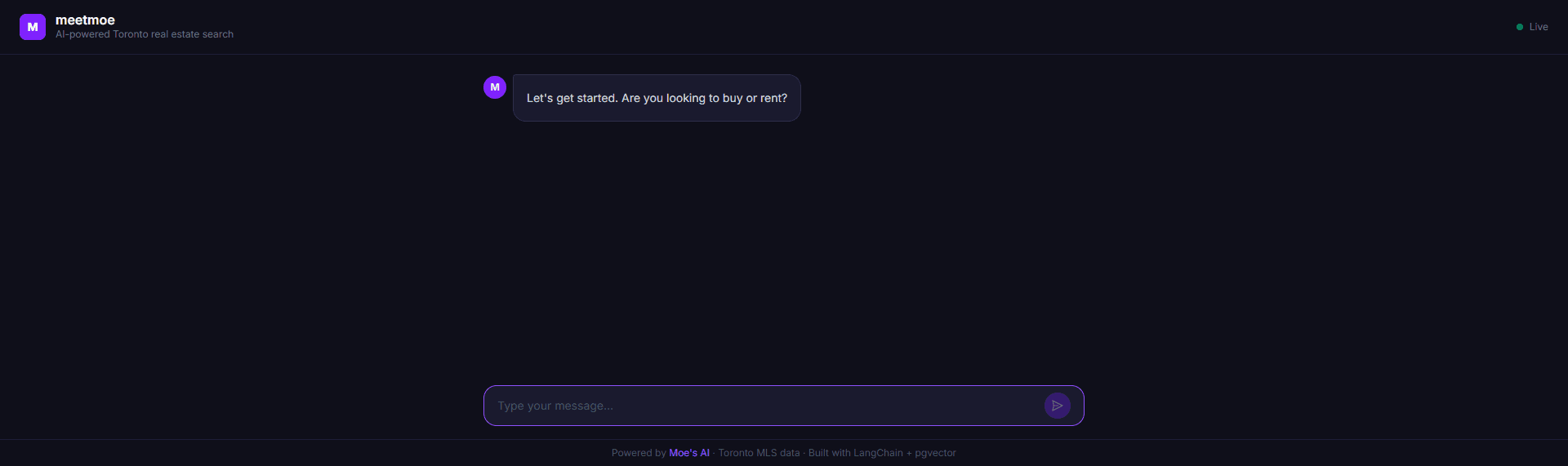

meetmoe — AI Realtor Chatbot (Built 2024)

An AI-powered chatbot that replaces search filters with natural conversation, surfacing Toronto MLS listings through a guided Q&A flow backed by LangChain + pgvector.


The Problem

Real estate search is broken. Every platform dumps you into a grid of filters — price sliders, bedroom dropdowns, neighbourhood checkboxes — before you've even decided what you want. The result: analysis paralysis, missed listings, and a frustrating UX that hasn't changed in a decade.

The gap

- Filter-first UX — Forces decisions before users know what to ask

- No context awareness — Can't adapt to vague or evolving preferences

- Data dead-ends — Narrow filters miss semantically relevant listings

- Mobile-hostile — Dense filter UIs break on small screens

The Build

In summer 2024 we built meetmoe, an AI-first chatbot for the Toronto condo market, in collaboration with a Toronto realtor. It is integrated with a live TRREB MLS data feed (vendor-approved access).

The idea

Instead of filters, meet a broker. The bot asks exactly the questions a good realtor would — intent, budget, neighbourhood, bedrooms, amenities — and retrieves relevant listings through a semantic RAG pipeline, not a keyword search.

Architecture

Backend (FastAPI + Python)

- LangChain orchestrates the conversation state: each user turn updates a `UserInfo` object tracking purpose, location, price ceiling, bedrooms, and amenities

- OpenAI GPT-4 drives the info extraction chain (structured JSON output via Pydantic) and the final property response chain

- pgvector on Supabase stores embeddings of MLS listings; property retrieval uses cosine similarity against the user's preference description

- Session management — Chats persist to Supabase between requests, enabling cold-start recovery
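A minimal sketch of the per-turn state update and session persistence, assuming the extraction chain returns a JSON object whose keys match the tracked fields. The field names (`purpose`, `location`, `max_price`, `bedrooms`, `amenities`) are assumptions based on the list above, a stdlib `dataclass` stands in for the Pydantic model to keep the example dependency-free, and a plain dict stands in for the Supabase sessions table. This is illustrative, not meetmoe's actual code.

```python
import json
from dataclasses import asdict, dataclass, field, fields
from typing import Optional


@dataclass
class UserInfo:
    # Hypothetical stand-in for meetmoe's UserInfo (the real one is a Pydantic model).
    purpose: Optional[str] = None    # "buy" or "rent"
    location: Optional[str] = None   # e.g. "Waterfront Communities C1"
    max_price: Optional[int] = None  # price ceiling in CAD
    bedrooms: Optional[int] = None
    amenities: list[str] = field(default_factory=list)

    def merge(self, extracted: dict) -> "UserInfo":
        """Fold one turn's extracted JSON into the state; absent or null keys keep their old value."""
        for f in fields(self):
            value = extracted.get(f.name)
            if value is None:
                continue
            if f.name == "amenities":
                # Accumulate amenities across turns, skipping duplicates and keeping order.
                for item in value:
                    if item not in self.amenities:
                        self.amenities.append(item)
            else:
                setattr(self, f.name, value)
        return self


# Hypothetical in-memory stand-in for the Supabase sessions table: session_id -> JSON blob.
SESSIONS: dict[str, str] = {}


def save_session(session_id: str, info: UserInfo) -> None:
    """Persist the full state after every turn so a dropped chat can resume."""
    SESSIONS[session_id] = json.dumps(asdict(info))


def load_session(session_id: str) -> UserInfo:
    """Restore saved state, or start fresh for an unknown session."""
    raw = SESSIONS.get(session_id)
    return UserInfo(**json.loads(raw)) if raw else UserInfo()
```

Because `merge` skips null keys, a turn where the model extracts nothing new leaves earlier answers intact, and serializing the whole object after each turn is what allows a session to be restored from storage on the next request.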

Frontend (Flutter → Next.js)

- Originally shipped as a Flutter mobile app

- Re-deployed in 2026 as a Next.js web chat UI with the same backend

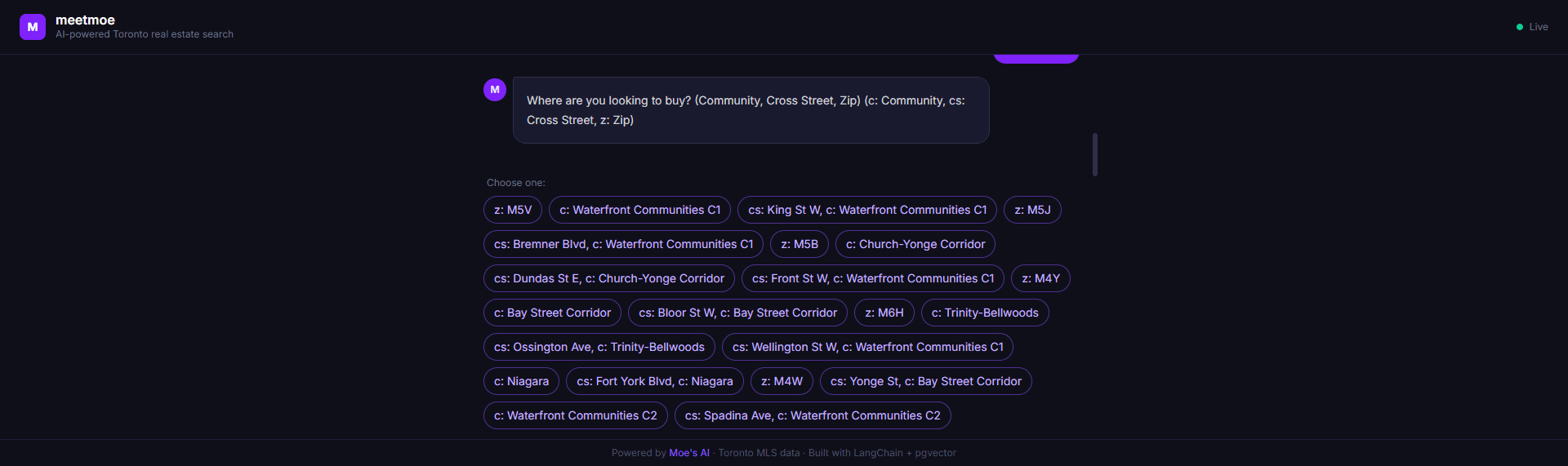

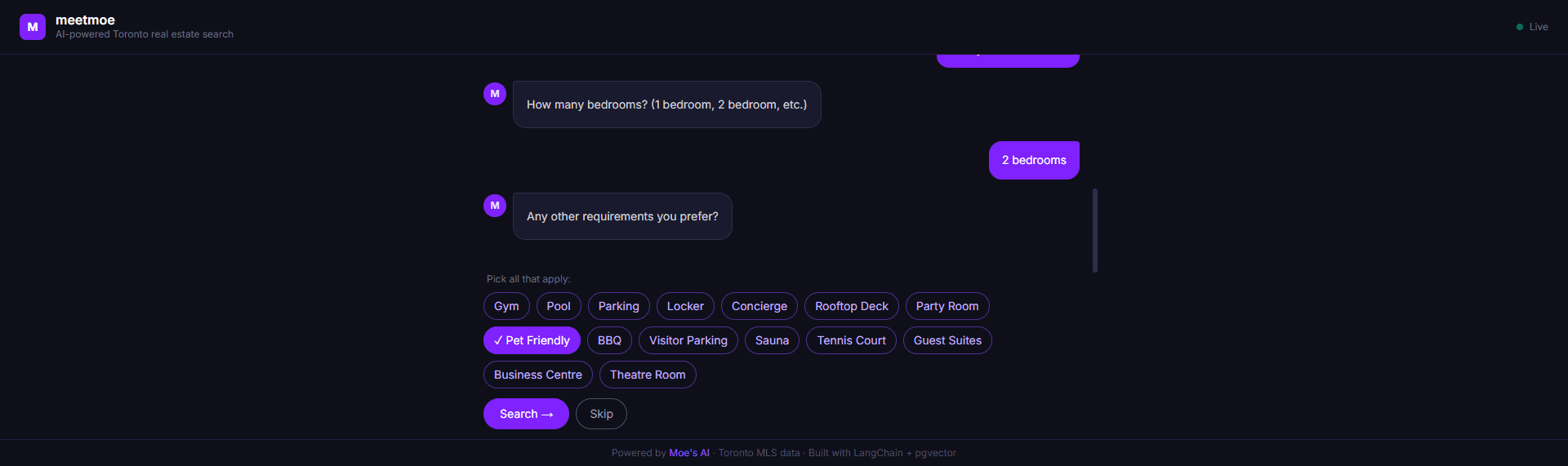

- Three input modes: free text, single-select pills (location), multi-select chips (amenities)

- Markdown table rendering for property results
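The frontend only has to render standard pipe-table Markdown. To make that format concrete, here is a minimal deterministic helper that emits such a table from listing dicts. In meetmoe itself GPT-4 writes the table (see the RAG pipeline), so this is a swapped-in illustration, and the column names you pass in are up to the caller.

```python
def to_markdown_table(listings: list[dict], columns: list[str]) -> str:
    """Render listing dicts as a pipe-style Markdown table, one row per listing."""

    def cell(value) -> str:
        # A literal "|" would split the cell, so escape it.
        return str(value).replace("|", "\\|")

    header = "| " + " | ".join(columns) + " |"
    divider = "| " + " | ".join("---" for _ in columns) + " |"
    rows = [
        # Fields missing from a listing render as empty cells.
        "| " + " | ".join(cell(listing.get(col, "")) for col in columns) + " |"
        for listing in listings
    ]
    return "\n".join([header, divider, *rows])
```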

Conversation flow

Bot: Are you looking to buy or rent?

User: buy

Bot: What's your buying budget in $CAD?

User: 700k-800k

Bot: Where are you looking? [pills: Waterfront Communities C1, Bay Street Corridor, ...]

User: [taps] Waterfront Communities C1

Bot: How many bedrooms?

User: 1 bedroom

Bot: Any other requirements? [chips: Gym, Pool, Pet Friendly, Locker, ...]

User: [taps] Pet Friendly → Search →

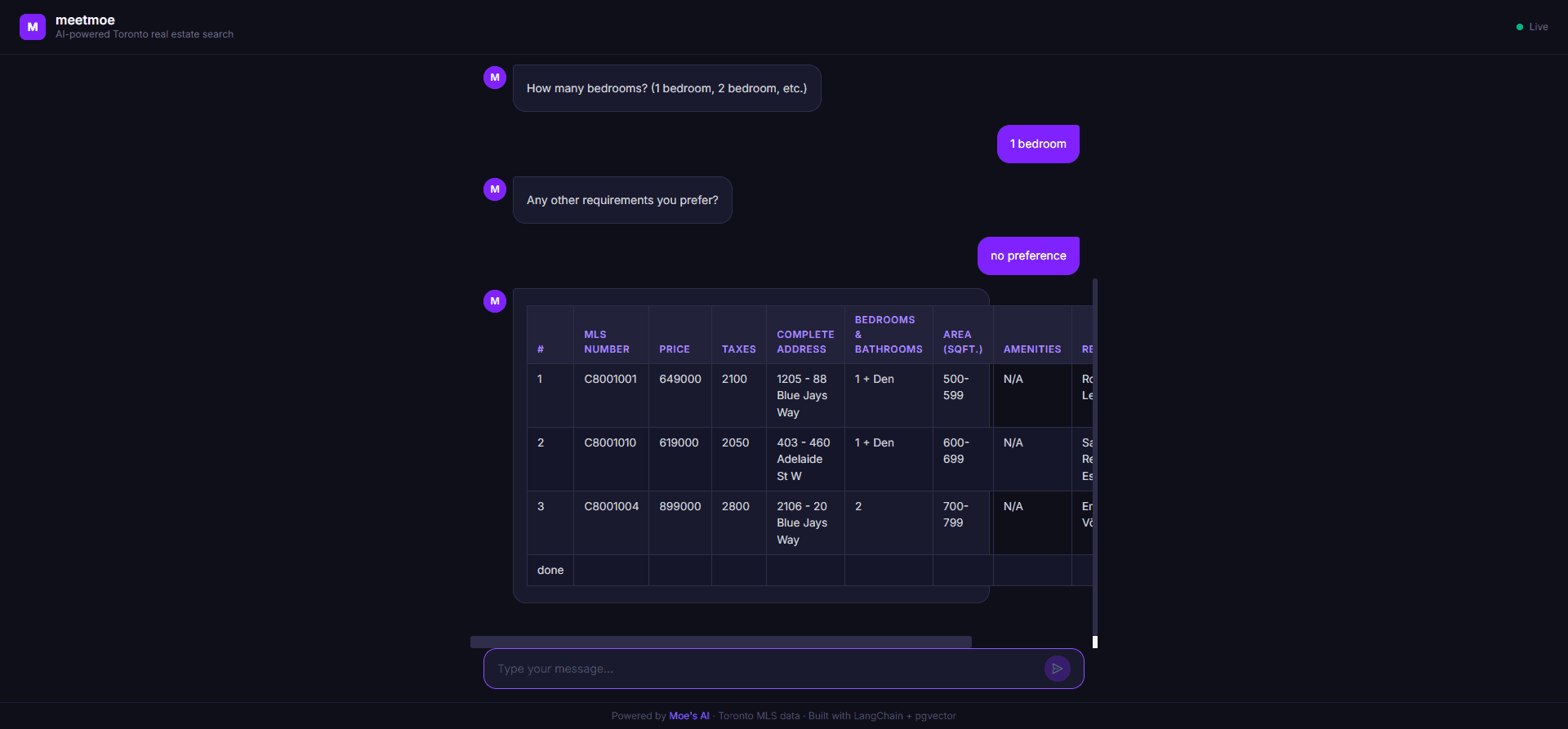

Bot: [returns markdown table of matching listings]
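The flow above is essentially slot filling: each question targets one preference field and declares how the UI should collect it. Below is a hypothetical sketch of that script, plus a naive budget parser that turns an answer like "700k-800k" into a price ceiling. The `Question` type, slot names, and the regex parser are illustrative assumptions; in meetmoe, GPT-4 performs the extraction.

```python
import re
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Question:
    slot: str                      # preference field the answer fills
    prompt: str
    mode: str                      # "text", "pills" (single-select) or "chips" (multi-select)
    options: tuple[str, ...] = ()


# Hypothetical script mirroring the transcript above; option lists are truncated examples.
SCRIPT = (
    Question("purpose", "Are you looking to buy or rent?", "text"),
    Question("max_price", "What's your buying budget in $CAD?", "text"),
    Question("location", "Where are you looking?", "pills",
             ("Waterfront Communities C1", "Bay Street Corridor")),
    Question("bedrooms", "How many bedrooms?", "text"),
    Question("amenities", "Any other requirements?", "chips",
             ("Gym", "Pool", "Pet Friendly", "Locker")),
)


def next_question(answers: dict) -> Optional[Question]:
    """Return the first question whose slot is still unanswered, or None when it's time to search."""
    for question in SCRIPT:
        if question.slot not in answers:
            return question
    return None


def parse_budget_ceiling(text: str) -> Optional[int]:
    """Naive parser: the largest amount mentioned, in CAD ('700k-800k' gives 800000)."""
    multipliers = {"": 1, "k": 1_000, "m": 1_000_000}
    cleaned = text.lower().replace(",", "")
    amounts = [
        round(float(number) * multipliers[suffix])
        for number, suffix in re.findall(r"(\d+(?:\.\d+)?)([km]?)", cleaned)
    ]
    return max(amounts) if amounts else None
```

Taking the upper bound of a range matches the "price ceiling" the state object tracks; a regex like this breaks on phrasing such as "no more than eight hundred thousand", which is exactly the kind of input an LLM extraction chain handles better.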

RAG pipeline

Each MLS listing is chunked and embedded at ingest time. At query time:

- User preferences are compiled into a natural language description

- That description is embedded and similarity-searched against the `documents` table

- Top-k results are injected into the chat context

- GPT-4 renders a structured markdown table with the matching listings

This gives results that are semantically aware — a user who says "spacious 1-bedroom near the lake" finds relevant listings even if they never said "Waterfront" or specified square footage.
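The retrieval steps can be sketched in plain Python. This assumes preferences arrive as a dict with hypothetical keys, uses toy vectors in place of real OpenAI embeddings, and ranks in memory. With pgvector the same ranking would be pushed into SQL using the cosine-distance operator `<=>`, along the lines of `ORDER BY embedding <=> $1 LIMIT k` (the `embedding` column name is an assumption).

```python
import math
from typing import Sequence


def describe_preferences(prefs: dict) -> str:
    """Compile collected preferences into the sentence that gets embedded as the query."""
    parts = [f"{prefs['bedrooms']}-bedroom condo" if "bedrooms" in prefs else "condo"]
    if "purpose" in prefs:
        parts.append(f"to {prefs['purpose']}")
    if "location" in prefs:
        parts.append(f"in {prefs['location']}")
    if "max_price" in prefs:
        parts.append(f"under ${prefs['max_price']:,} CAD")
    if prefs.get("amenities"):
        parts.append("with " + ", ".join(prefs["amenities"]))
    return " ".join(parts)


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """cos(a, b) = a.b / (|a||b|); pgvector's <=> operator returns 1 minus this value."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(query_vec: Sequence[float], docs: dict[str, Sequence[float]], k: int = 3) -> list[str]:
    """Rank listing IDs by similarity to the query embedding, best first, and keep k."""
    ranked = sorted(docs, key=lambda doc_id: cosine_similarity(query_vec, docs[doc_id]), reverse=True)
    return ranked[:k]
```

For the transcript's answers, `describe_preferences` yields "1-bedroom condo to buy in Waterfront Communities C1 under $800,000 CAD with Pet Friendly". Embedding a whole sentence rather than matching individual fields is what lets a listing described as "steps from the lake" score well without the word "Waterfront" appearing in it.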

The Outcome

Shipped in ~6 weeks:

- Conversational UX — Zero filters; full intent captured through dialogue

- Live MLS data — TRREB feed with real Toronto condo listings

- Multi-modal input — Free text, pills, multi-select chips based on question type

- Persistent sessions — Users can drop and resume without losing context

- Flutter app + web — Single backend serving both surfaces

What this proves

LLM-powered information extraction makes for a better UX than form-based filtering for high-consideration purchases. A user who types "something near the waterfront, pet-friendly, under 700k" gets better results than one who laboriously sets six filter dropdowns, and gets them faster.

meetmoe is live at meetmoe.ai — try the full conversation flow.

Tech Stack

LangChain, OpenAI GPT-4, pgvector, Supabase, FastAPI, Python, Flutter, Next.js, Google Cloud Run